If you’re in America (this might be happening elsewhere, too), the world we live in feels a little different. Information flow was previously more limited and therefore controlled: the general consensus was familiar and comfortable. Opinions and understandings were a little more homogeneous.

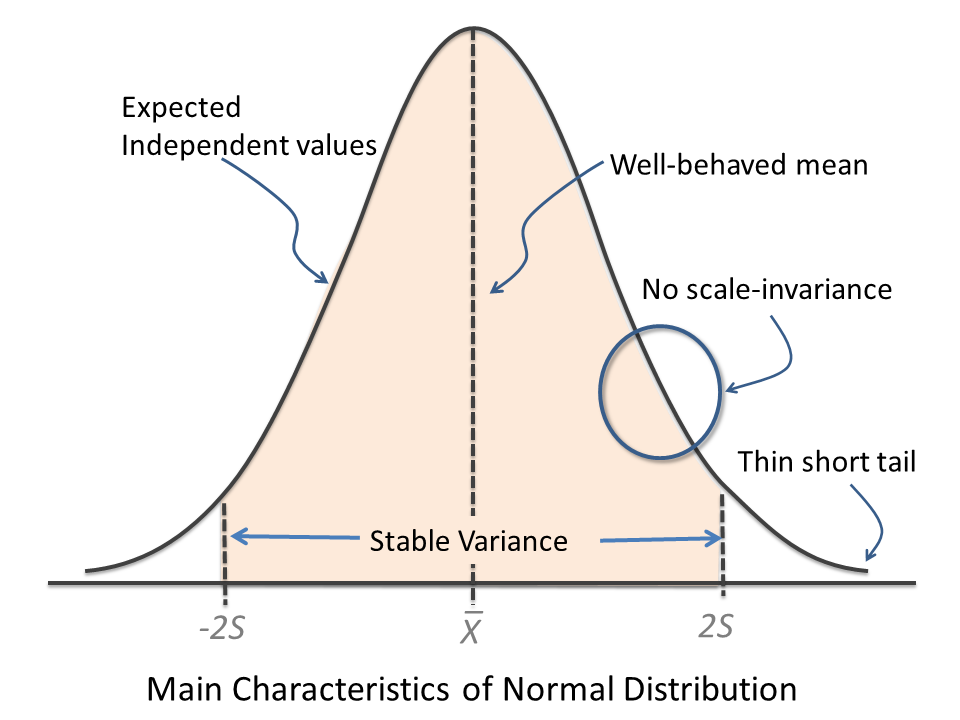

It felt like most of the time, we spent our days in the fat belly of the bell curve. Or at least those screaming in the thin tail were easier to ignore.

It gradually changed to where the thin tail started to look more like a fat tail. But that was OK, even preferable if you ask me. We need to challenge our assumptions and let go of what we believe, should better information present itself. We got better, and faster, at Bayesian updating. We might chalk it up to the explosion of information availability, the value placed on forward-thinkers, and a movement of innovators/disrupters.

And then, seemingly all of a sudden, our bell curve with fat tails started looking more like a dumbbell. There are probably multiple reasonable causes, but the finger has largely been pointed at echo chambers created through search algorithms. The metrics used largely revolve around driving “clicks.” The thing that seems to get those clicks is either that which confirms our biases or the polar opposite: what I want to believe vs. what I want to argue against.

That information flow, which more people are able to contribute to (think social media over “news at 10”), is now controlled by those making ad revenue through clicks. Incentives drive action.

And as we spend more and more time searching for confirmation on our little dopamine machines (phones/tablets/laptops), we might find ourselves in a very dangerous training ground. There’s hypertrophy of polarizing viewpoints.

We certainly see this shift from fat belly to fat tail to dumbbell curve taking place in our politics (who’s getting ready to ignore their Facebook feed in 2020 besides me?).

I’m going out on a limb here, but it feels like a lot of our thoughts and actions mimic the same divergence.

The Danger of False Dichotomy During Surgery…

For the sake of our patients, we really need to reset the way we think/communicate/behave when the stakes are high. The false dichotomy fallacy is one of those mental shortcuts we should really try our best to fend off. In areas of complexity, like human neurophysiology during surgery, we need to think beyond “either, or.”

In practice, it might look something like this:

Step 1: I can’t get motors. It’s either the stimulation or anesthesia.

Step 2: I already turned up my stimulation.

Step 3: You need to turn down your propofol.

Now, that’s a pretty simple scenario where it would be easy to say, “ya, those one-trick ponies don’t belong in the OR!”

While true, it’s not the overall capabilities I’m trying to call out. If pushed for more suggestions, or asked in a different setting, the person falling prey to thinking black or white would most likely be able to come up with a couple of other things to consider. What I’d like to point out are the unwanted behaviors all of us are prone to stumble over in specific moments.

And so are all the other surgical team members.

This logical fallacy, in particular, is the inappropriate creation of an artificial scenario where there are no more than 2 options available. It’s black or white, with us or against us, or it’s me or you (and it ain’t me).

Example of a Team Getting It Right…

The reason for me even writing this post was due to a case study I read. I typically go into case studies with a couple of assumptions:

- I’m probably going to read about some fringe occurrences that may or may not be linked to the claim.

- It’s probably better for raising more questions than answering any single one. That’s the nature of anecdotal evidence.

- It’s still science, so it is one more step, or data point, closer on the slow march towards the “truth.”

- There might be some other story to find than the main one being told. It can act as a window of the author’s (probably idealized) view of how they operate.

Side note: I secretly like case studies. I’m aware of their limitations, but I’d be a big fan of more of our community getting involved in publishing these.

This case study was titled:

Somatosensory evoked potential loss due to intraoperative pulse lavage during spine surgery: case report and review of signal change management.

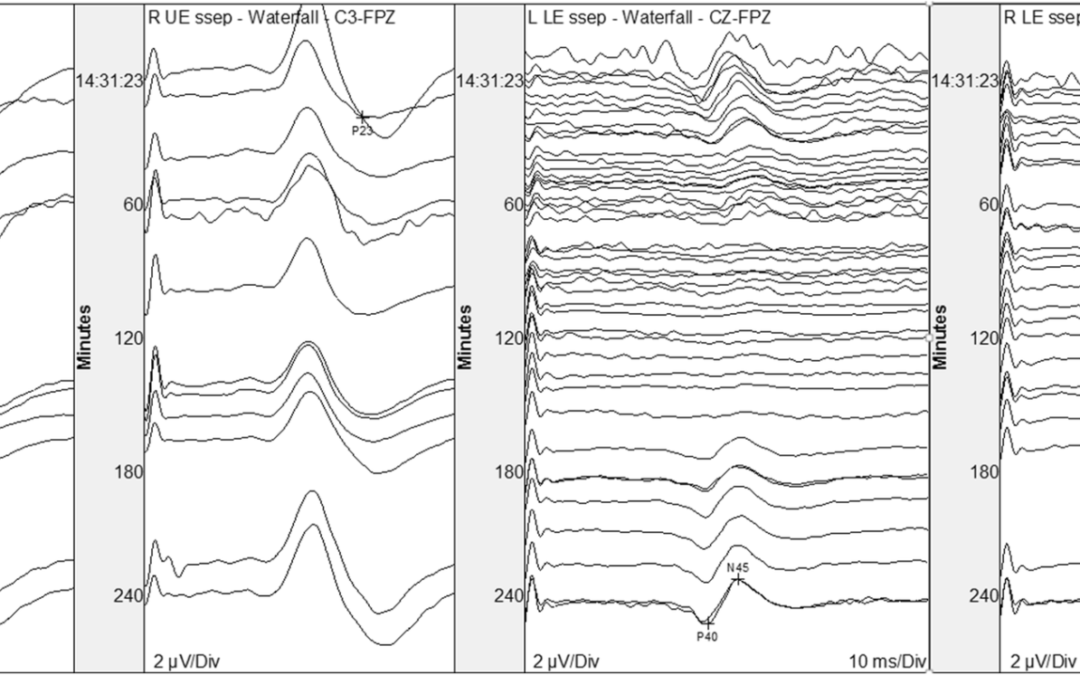

In the report, there was an uncommon change during a relatively benign part of the surgery (a unilateral LE SSEP change well after instrumentation and during irrigation in a lumbar surgery). It was one of those instances prone to bring out one’s tendency for seeing things as black or white. Maybe even some cheap finger-pointing tactics had a chance to “win” in conversations.

That’s not the direction things went after the team was informed of an alarm. The write-up did a pretty good job of going through some of the potential causes and why the team was able to rule things in or out. Options were explored, experts were asked to weigh in, and logic drove their decision making.

The main story to be told was the cautionary tale of pulse lavage with cold irrigation. It didn’t confidently answer any question per se, but it does help raise questions like, “We know that less pressure on the spinal cord is needed when blood saturation drops, but does lower temperature cause the same effect when seen with these ‘microinsults’? Or does it only facilitate a conduction block and not an injury? Or was the temperature alone enough to cause a conduction block in this case, independent of the pulse lavage?”

But the other story told is that of a collaborative team working through a decision process with key individuals responsible for adding value in their respective fields. The story unfolds nicely in chronological order and allows us to visualize it as if in real time.

And that’s a valuable lesson, especially for all/most of us living in our current polarizing social climate, exposing some of the downsides of our tribalism.

One Last Thing…

As part of your own team (or even in the comments section below), you can go through case studies like this one to learn from and model.

Of course, not everything published is best practice. And there’s also plenty to learn by keeping a critical eye and avoiding an unwavering appeal to authority.

If you wanted to give a recommendation to the team involved in this paper, what might it be after taking a quick glance at their tcMEP stack?